Deep Tech: AI Based Animal Detection Demo App for iPhone

In this post, I describe an animal recognition demonstration app I developed for the iPhone. The “MegaDetector-Demo” app uses the latest “MegaDetector” animal detector model from PyTorch-Wildlife to identify animals, people and vehicles in a live video feed from the iPhone camera. The demo app is available as an Xcode project for Apple developers on my GitHub site Here. I hope this basic app will encourage more practical uses of edge-based AI tech in trail cameras.

I am grateful to Zhongqi Miao at PyTorch-Wildlife for his work on MegaDetector, and for encouraging me to build this demo app. Like many others, I am indebted to Microsoft AI for Earth, the Caltech Resnick Sustainability Institute, and the developers of MegaDetector.

Background

In the last 10 years, reliable computer-based recognition of objects within high resolution images has gone from being “impossible” to “ubiquitous.” Two things made this remarkable transition possible: neural network models that can be “trained” on the vast collections of images now readily available, and high speed computer systems that run these models efficiently.

Trail Camera Applications for AI Image Detection

Image detection technology first intersected camera-trapping as a way of dealing with the “data deluge” many researchers and organizations face when trying to extract information on animal presence and population from easy-to-install camera-trap networks.

Early attempts to use standard detectors on trail camera photos fared poorly. Recognizing animals in trail camera images turned out to be a challenging technical problem. Such photos are often devoid of animals (false triggers). When animals are present, they are often off-center, poorly lit, or partly hidden by intervening vegetation. Contrast this with the photos taken by humans that make up the training sets for standard detectors: they usually contain the animal of interest, the animal is framed prominently, the lighting is good, and the animal is not obscured by vegetation.

MegaDetector Model

The MegaDetector model was trained specifically on a vast collection of human-annotated trail camera photos. Its aim was to provide human-level accuracy in detecting and locating animals within single images from trail cameras placed anywhere in the world. This was an enormous technical task. For those interested in the techniques developed, please check out the references at the end of this post.

Since its inception, MegaDetector has gone through several iterations. Each iteration has improved on the accuracy of the model while simultaneously requiring less computation. When I first encountered MegaDetector in 2019, it required a high performance ~300W server with a state-of-the-art GPU to run, and detected animals in trail camera photos at a rate of about two images per second.

PyTorch-Wildlife is the organization now responsible for maintaining and extending MegaDetector. In the summer of 2024, they released the Beta version of MegaDetector V6c based on YOLOv9c. YOLOv9c is a model designed to be small enough to run on small “edge” devices, like smart phones and cameras. I found that this model runs easily on an iPhone 13 and an iPhone 15, detecting animals, people, and vehicles in images at a rate of 30 frames per second (with an average inference latency of about 25 ms), while consuming an incremental ~2.5 Watts of power.

Change in MegaDetector Energy Efficiency

From 2019 to 2024, the energy cost of running MegaDetector, which we can measure in “Joules/Inference,” has gone from ~150 Joules/Inference (a ~300 W server at about two images per second) to ~80 mJ/Inference (~2.5 W at 30 frames per second): a factor of ~1800! The like-for-like ratio is a little lower, given that the earlier MD5 (MegaDetector V5) model operates at higher resolution. Still, such a vast improvement in computational efficiency portends a broadening of MegaDetector usage models. One obvious new usage model is processing videos, which are increasingly used in trail camera settings, rather than single images. Running MegaDetector in an “edge” setting, possibly as part of the trail camera image capture pipeline itself, is another promising usage model, and the focus of the MD-Demo app.
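The arithmetic behind these figures is just power divided by throughput. Here is a minimal Python sketch using the approximate numbers quoted in this post (the function and variable names are only illustrative):

```python
def joules_per_inference(power_watts: float, inferences_per_second: float) -> float:
    """Energy per inference = power (W) / throughput (inferences per second)."""
    if inferences_per_second <= 0:
        raise ValueError("throughput must be positive")
    return power_watts / inferences_per_second


# Approximate figures quoted in this post.
server_2019 = joules_per_inference(300.0, 2.0)  # ~300 W server, ~2 images/s
iphone_2024 = joules_per_inference(2.5, 30.0)   # ~2.5 W incremental, 30 frames/s

improvement = server_2019 / iphone_2024

print(f"2019 server: {server_2019:.0f} J/inference")
print(f"2024 iPhone: {iphone_2024 * 1000:.0f} mJ/inference")
print(f"Improvement: ~{improvement:.0f}x")
```

The iPhone figure works out to roughly 83 mJ, which I round to ~80 mJ in the text.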

MD-Demo App

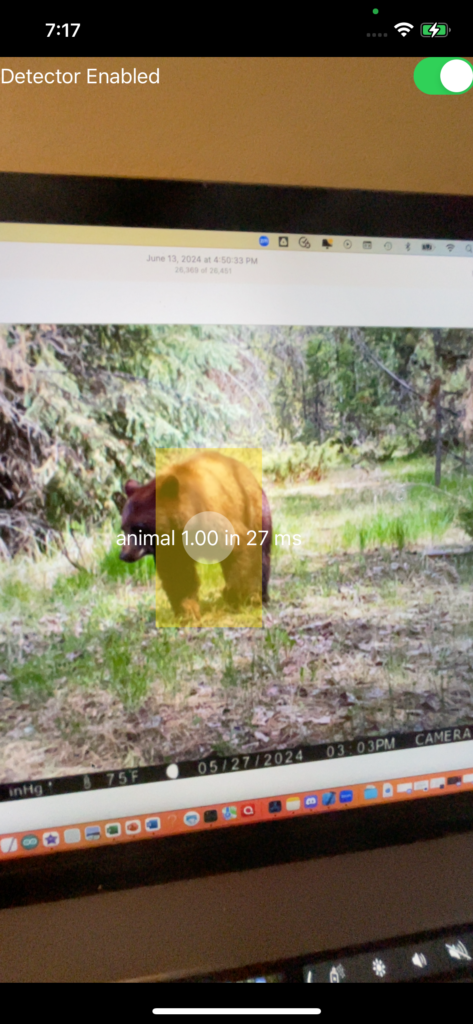

The MD-Demo App is a simple demonstration of the MegaDetector-V6c model operating on an iPhone. The app displays a live video feed on the iPhone screen. The MegaDetector model runs on every frame. The app draws a shaded box around any animals, people, or vehicles detected, and displays this box on the screen superimposed over the video preview. The shaded box includes a central region, which could be used for a future autofocus feature, and text fields showing the object class (“animal”, “person”, “vehicle”) and the time taken for each object detection in milliseconds.

I also added a toggle switch in the top right of the screen to enable or disable the MegaDetector inference engine. This is what I used (in conjunction with a USB power monitor) to calculate the incremental power consumed by MegaDetector-V6c.

How MD-Demo App Works

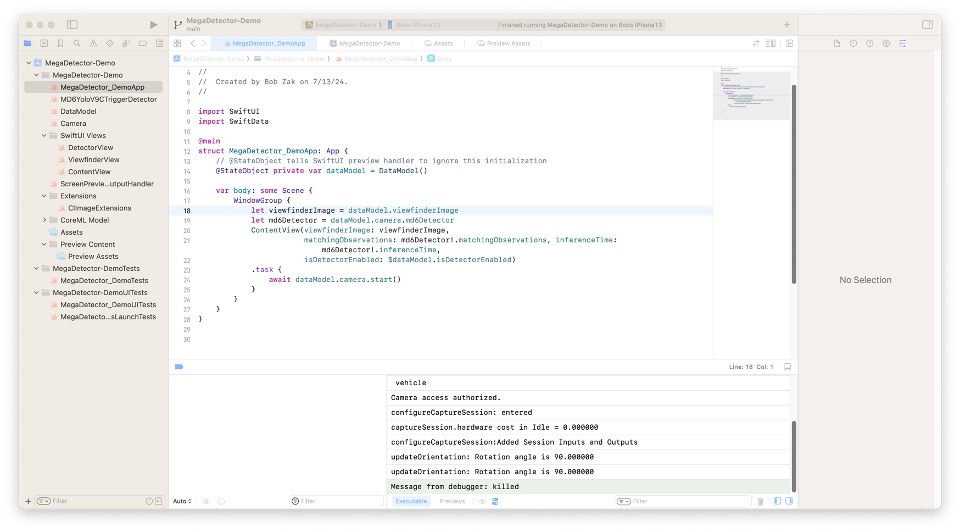

This is a simple technology demo application, which I provide as a reference for those interested in conservation tech, MegaDetector, and “edge-based” applications. Hopefully, it inspires others to build other applications based on this technology.

Here is the basic application flow:

- Opens up the front facing camera

- Uses the Core ML library to load and initialize the MegaDetectorV6C model

- Creates a stream of images from the video feed

- Displays the image stream from the camera on the iPhone screen

- Passes each frame from the video feed to a “Core ML” version of the MegaDetectorV6C model

- Displays the detection results on the screen, along with a central “focus” circle, and text superimposed on the video feed
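The per-frame part of this flow can be sketched in a few lines. The sketch below is in Python rather than Swift so it runs anywhere, and it is not the app's actual code: the detector is a stand-in function, and the class order, the `Detection` type, and the 0.5 confidence threshold are all assumptions for illustration.

```python
import time
from dataclasses import dataclass
from typing import Callable, List

# Assumed class order; check the labels of the model you actually export.
CLASS_NAMES = ("animal", "person", "vehicle")


@dataclass
class Detection:
    class_id: int
    confidence: float
    box: tuple  # (x, y, width, height), normalized to 0..1


def process_frame(frame, detect: Callable[[object], List[Detection]],
                  min_confidence: float = 0.5) -> List[str]:
    """Run the detector on one frame and return the overlay label strings."""
    start = time.perf_counter()
    detections = detect(frame)
    elapsed_ms = (time.perf_counter() - start) * 1000.0

    labels = []
    for d in detections:
        if d.confidence < min_confidence:
            continue  # drop weak detections before drawing anything
        labels.append(
            f"{CLASS_NAMES[d.class_id]} {d.confidence:.2f} ({elapsed_ms:.0f} ms)"
        )
    return labels


def fake_detector(frame) -> List[Detection]:
    """Stand-in for the Core ML model so the sketch is self-contained."""
    return [
        Detection(0, 0.91, (0.40, 0.30, 0.20, 0.30)),
        Detection(1, 0.20, (0.10, 0.10, 0.10, 0.20)),
    ]


overlay = process_frame(frame=None, detect=fake_detector)
print(overlay)
```

In the real app, the detector call would be the Core ML model and the returned labels would be drawn over the video preview along with the boxes.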

Tools

I wrote the application in Swift, using “SwiftUI” for all screen display and the “Core ML” library (among others). I developed and tested this app in Xcode 16. I’ve tested it on an iPhone 13 and an iPhone 15 Pro.

Possible Uses for new MegaDetector V6 Model

The iPhone app is really just a technology demonstration. Here are some ideas on how this tech might be made more useful, specifically focused on edge applications.

Smart Trigger

The model could be part of a “smart trigger” device for trail cameras, replacing or augmenting PIR detectors. A standalone system could run the model all the time and trigger when an animal is detected. Even at 80 mJ/inference, however, running continuously takes a lot of power. Alternatively, one could run the model only after the PIR sensor triggers a new image, and discard (or at least categorize) apparent false triggers.
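To get a feel for the difference between these two approaches, here is a rough Python estimate of average power. It counts inference energy only, using the ~80 mJ figure from this post, and ignores everything else (image capture, idle and wake-up power). The 200 triggers per day is a made-up number for illustration.

```python
ENERGY_PER_INFERENCE_J = 0.080  # ~80 mJ per inference, as measured on the iPhone
SECONDS_PER_DAY = 24 * 60 * 60


def average_power_watts(inferences_per_day: float,
                        energy_per_inference_j: float = ENERGY_PER_INFERENCE_J) -> float:
    """Average power spent on inference alone, spread over a full day."""
    return inferences_per_day * energy_per_inference_j / SECONDS_PER_DAY


always_on_30fps = average_power_watts(30 * SECONDS_PER_DAY)  # every frame, all day
always_on_1fps = average_power_watts(1 * SECONDS_PER_DAY)    # one frame per second
pir_gated = average_power_watts(200)  # hypothetical: 200 PIR triggers per day

print(f"Always on, 30 fps: {always_on_30fps:.2f} W")
print(f"Always on, 1 fps:  {always_on_1fps * 1000:.0f} mW")
print(f"PIR gated:         {pir_gated * 1e6:.0f} uW")
```

Under these assumptions, checking only PIR-triggered images costs several orders of magnitude less inference energy than running on every frame.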

Low Bandwidth Image Digests

It’s currently very expensive to send full resolution video over cellular or, especially, globally accessible satellite networks. MegaDetector and other similar models could be deployed with the camera to automatically assess and rank image quality, and possibly identify key target species. This much-compressed “digest” of captured images could be sent via expensive cell/satellite communications. The digest could even identify specific high quality or highly relevant images/videos which might justify the cost of a cell/satellite upload.
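As a rough idea of what such a digest might look like, here is a small Python sketch. The record format, field names, and file names are all hypothetical. It simply counts detections per class and lists the highest-confidence non-empty images as upload candidates.

```python
import json
from collections import Counter


def build_digest(records, top_n=3):
    """Summarize per-image results into a small, uploadable dictionary.

    records: list of dicts with "file", "label", and "confidence" keys
    (a hypothetical format chosen for this sketch).
    """
    counts = Counter(r["label"] for r in records)
    candidates = [r for r in records if r["label"] != "empty"]
    best = sorted(candidates, key=lambda r: r["confidence"], reverse=True)[:top_n]
    return {
        "counts": dict(counts),
        "best": [
            {"file": r["file"], "label": r["label"],
             "confidence": round(r["confidence"], 2)}
            for r in best
        ],
    }


# Made-up example results for four captured images.
records = [
    {"file": "IMG_0001.JPG", "label": "animal", "confidence": 0.93},
    {"file": "IMG_0002.JPG", "label": "empty", "confidence": 0.0},
    {"file": "IMG_0003.JPG", "label": "person", "confidence": 0.71},
    {"file": "IMG_0004.JPG", "label": "animal", "confidence": 0.55},
]

digest = build_digest(records, top_n=2)
payload = json.dumps(digest, separators=(",", ":")).encode("utf-8")
print(digest)
print(len(payload), "bytes")
```

A digest like this is a few hundred bytes at most, compared with megabytes for each full resolution image or video.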

On Camera Pan/Zoom

Modern smart phone cameras often have both a wide angle, and narrow angle camera (or cameras). MD6 could be used to figure out which one to use, depending on the animal’s location in front of the camera. This would be better than post-production pan/zoom, since it would give full optical resolution at wide and narrow views.
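One simple way to make that choice is sketched below in Python. It assumes the narrow camera sees the central 1/zoom_ratio portion of the wide camera's frame, with both cameras pointing the same way. The 3x zoom ratio, the safety margin, and the function name are illustrative assumptions, not properties of any particular phone.

```python
def choose_camera(box, zoom_ratio=3.0, margin=0.02):
    """Pick "narrow" if the detection fits inside the narrow camera's view.

    box: (x_min, y_min, x_max, y_max), normalized 0..1 in the wide frame.
    zoom_ratio: assumed ratio between the narrow and wide focal lengths.
    margin: safety border, in wide-frame units, kept around the animal.
    """
    if zoom_ratio <= 1.0:
        raise ValueError("zoom_ratio must be greater than 1")
    x_min, y_min, x_max, y_max = box

    # The narrow camera covers a centered window of the wide frame.
    half = 0.5 / zoom_ratio
    lo = 0.5 - half + margin
    hi = 0.5 + half - margin

    fits = lo <= x_min and x_max <= hi and lo <= y_min and y_max <= hi
    return "narrow" if fits else "wide"


centered = choose_camera((0.45, 0.40, 0.60, 0.62))  # small, near the center
off_axis = choose_camera((0.05, 0.40, 0.30, 0.70))  # near the left edge

print(centered, off_axis)
```

Here the first detection sits inside the narrow camera's window, while the second is too far off-axis and needs the wide camera.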

Feedback

Please let us know in the comments below if you use this demo project, and what other projects it may inspire.

References

There are many online tutorials and projects that deal with processing a video feed and using the Vision AI library in Xcode. I’ve borrowed shamelessly from these, including:

- Apple Xcode Project: “Capturing Photos Camera Preview”

- Apple Xcode Project: “Debugging Your Machine Learning Model”

MegaDetector has a long history, which you can read about in these references:

- PyTorch-Wildlife: Organization that currently develops and maintains MegaDetector models as part of a broader mission to provide AI-based tools for conservation organizations and researchers.

- Beery, Sara, Dan Morris, and Siyu Yang. “Efficient pipeline for camera trap image review.” arXiv preprint arXiv:1907.06772 (2019).

- Norouzzadeh MS, Morris D, Beery S, Joshi N, Jojic N, Clune J. A deep active learning system for species identification and counting in camera trap images. Methods Ecol Evol. 2021;12:150–161.

- Beery, Sara. “The MegaDetector: Large-Scale Deployment of Computer Vision for Conservation and Biodiversity Monitoring.”

Very interesting, I’m installing EcoAssist and Gradio here on my PC.

Nowadays some Bushnell trail cameras already have A.I. in them, see below.

https://www.simmonssportinggoods.com/bushnell-core-4ks-true-target/

Sincerely,

Erik

Thanks for the pointer. This is a good application. May have to give this camera a try, if only to try it out.

My first test with the EcoAssist software.

I did it on a folder with 99 video files.

I configured EcoAssist to only use MegaDetector 5a without identifying the animals.

I selected the option in the software to copy the files into subfolders to test.

The software created three folders.

https://i.postimg.cc/g2RDvczV/99-files.jpg

– animal – 71 files – 70 files with animals and one file without an animal

– empty – 24 files – all without animals

– person – 4 files – all correct, in the video only the records of my legs.

Table generated by the software

https://we.tl/t-ndye8NNkTv

I used 120 frames as the configuration to analyze, which would be the first two seconds of the video, since the videos are 60 fps.

I think I’ll change it to at least the first 5 seconds, 300 frames, to have a greater margin of safety.

Of course, it’s always good to take a look to see if the software has done everything right.

I would like to thank you, Mr. Robert, for introducing this technology to everyone on your blog. It will certainly help a lot in separating false triggers from video recordings of trail cameras.

Sincerely,

Erik from Brazil.

Thanks for the pointer and info on EcoAssist. As with other applications of AI these days, it’s hard to keep track of all the new tools coming out.

-bob

Thanks for sharing. I noticed that my SPYPOINT cameras have AI filters. The cameras come standard with a “buck” filter and users have the option to purchase filters for half a dozen other wildlife species. I don’t have enough “captures” to find it useful at this time. Maybe in the future.

That’s cool. I expect the trail camera companies will continue to develop these based on the photos being uploaded from their cell cams. It’s a good business model. Do you know if the filter is applied at the camera, vs. in the cloud? (i.e. does the filter prevent non-bucks from being uploaded; or are all photos uploaded, but only bucks are shown).

Thanks for the info!

“Buck Tracker” is A.I. image recognition software that works via the smartphone’s SPYPOINT app on photos taken by SPYPOINT cellular cameras. When your camera captures a photo, BUCK TRACKER automatically scans it for recognizable species features (such as antlers) and categorizes it with a special tag in your photo gallery.

The app separates buck from doe and will send you an alert on your phone. If you pay more (I do not) you gain access to eight species filters:

• Buck

• Antlerless Deer

• Turkey

• Coyote

• Moose

• Wild Boar

• Bear

• Human Activity

The AI scan can also identify human activity.

Buck Tracker has been around for a while https://www.youtube.com/watch?v=nNCL6nXSjPg

I’m not a huge fan of Spypoint cameras, but the tech they continually modify in their cameras has an appeal to the techie in me. Just yesterday the firmware in my camera was remotely updated, adding features such as “scheduling” and “instant photo,” where the camera sends a photo on command. I waited until the camera went on sale for $50 and picked one up. I get 100 cell photos free each month, which is enough to stay entertained. Note: I am not recommending these cameras.

Buck Tracker for Deer is free.